Journal of the NACAA

ISSN 2158-9429

Volume 13, Issue 1 - June, 2020

PRACTICAL EVALUATION TOOL AND LOGIC MODEL FRAMEWORK FOR EXTENSION EDUCATORS AND GRANT WRITERS

Majumdar, A., Extension Professor, Department of Entomology and Plant Pathology, Alabama Cooperative Extension System, Auburn University

Chambliss, A., Program Assistant & Outreach Administrator, Alabama Cooperative Extension System, Auburn University

Fadamiro, H., Professor, Department of Entomology and Plant Pathology, Auburn University

Balusu, R.R., Research Fellow III, Department of Entomology and Plant Pathology, Auburn University

ABSTRACT

Extension evaluation is a relatively young field of knowledge. Much of what we know today about program evaluation comes from the public education and health fields, and adapting those methods to agricultural Extension can be difficult. This article draws on the authors' long-term experience establishing a strong evaluation feedback system for two large grant-based programs in the Alabama Cooperative Extension System (ACES). The discussion of the steps in an evaluation strategy and of electronic polling systems for data collection should appeal to new and experienced Extension educators. Several reporting ideas are also included, along with thoughts on improving response rates and building evaluation capacity.

INTRODUCTION

Extension in the Information Age

Agricultural Extension, or simply ‘Extension’, as we know it today, is not just a frantic set of activities consisting of field visits, workshops, emails, phone calls, or text messages for information transfer; Extension educators must have educational process competencies to succeed (Ghimire and Martin, 2011). The new model of Extension involves a systematic effort that includes systems thinking about agricultural issues, program strategy and management, a communication and marketing plan, and evaluation and reporting of program outcomes (Kluchinski, 2014; Radhakrishna and Brown, 2010). In other words, Extension personnel must document the public value of their efforts and justify the costs (Stup, 2003). With the changing role of Extension and the requirements of funding agencies, new Extension employees often struggle to develop written plans for basic educational methods, communication and marketing systems, and evaluation strategy within the Logic Model framework (Braverman and Engle, 2009; Radhakrishna and Brown, 2010).

The evaluation tool presented here should appeal to new and experienced educators looking for ideas on evaluative thinking in Extension programming. The modified Logic Model proposed here could serve Extension personnel as a template for early-stage grant planning that is tied to a realistic budget. The overall goal of this article is to make evaluation a fun and practical activity that captures public value and yields useful data to share with administrators and peers (Osborne and Gaebler, 1992; Kalambokidis, 2004).

Relevance of Extension Evaluations

The evaluation tool is based on our collective experience over the past 12 years at ACES developing and implementing over 50 grower-centered and evaluation-focused collaborative grants and 16 capacity-building initiatives, and providing direct training to over 1,000 Extension educators. Allen (2009) described evaluation as being "like a good story, it needs some (qualitative) evidence to put the lesson in context, and some (quantitative) facts and figures that reinforce the message." Based on our experience, we define Extension evaluation as PLANNED activities that use time, human, and/or capital resources to determine program success and show public value. Program evaluation has become a standard requirement for many long-term and integrated grant projects that give equal emphasis to research, educational curricula, project monitoring, and documentation of major impacts. Evaluations are important for:

- Establishing accountability to stakeholders (justifying your program)

- Determining effectiveness of program design and instructional strategies (success indicators)

- Determining program direction and continuation (formative evaluation)

- Determining impact of programs after a time period (summative evaluation)

- Determining the returns on investment (impact)

Evaluation instruments can be quantitative or qualitative in nature, depending on the evaluation type, the technique used, and the purpose.

Modified Logic Model Framework for Grant Writers

The Logic Model, popularized by the University of Wisconsin's Division of Extension (https://fyi.extension.wisc.edu/programdevelopment/logic-models/), builds the seven basic assessment levels suggested by Bennett (1975) into a useful visual and planning tool for Extension program developers. In the USDA NIFA model, evaluation is included without specific descriptors (see the link to the USDA NIFA website in the references). Figure 1 provides a modification of the ‘Generic Logic Model for NIFA Reporting’, available online, for educators and grant writers. This modified Logic Model framework emphasizes evaluation in the planning stage by integrating: 1) baseline data in the ‘Inputs’ column; 2) Extension and evaluation activities, along with budget allocation, in the ‘Outputs’ column; and 3) evaluation plans for short-term and medium-term changes. The modified framework also reminds program developers to focus on capacity building, communication, and marketing plans as they relate to program implementation and process evaluation. The point is that program leaders need not wait for ‘impacts’ to happen: documenting what unfolds during the implementation phase (process evaluation) tells a powerful story! In other words, evaluation should be continuous and standardized across a program.

Figure 1. Modified Logic Model emphasizing research, Extension, and evaluation methodology for grant planning.

Although the Logic Model seems to suggest a linear relationship between project outputs and outcomes, the narrative of a grant proposal should convey the cyclical, utilization-focused nature of process and outcome evaluations (Patton, 2008). In other words, choose success indicators carefully and measure them consistently over a long period of time. Compile and share the data to improve deliverables on a continuing basis instead of ‘storing away’ useful information (Figure 2).

Figure 2. A modern Extension Logic Model should emphasize project monitoring and the use of evaluation data to improve program deliverables.

Evaluation Standards

According to the University of Wisconsin Program Development and Evaluation Team (Taylor-Powell and Henert, 2008; Milstein and Wetterhall, 1999) and the CDC Program Performance and Evaluation Office (2017), every evaluation must have:

- Utility - satisfy the information needs of specific users (utilization focus)

- Feasibility - take a realistic approach to assessments (participatory focus)

- Propriety - be ethical and legal, and respect the privacy of others

- Accuracy - provide reliable information that can be archived and used in the future.

EVALUATION METHODS

Basic Steps to Extension Evaluation System

One of the fundamental questions asked by new Extension faculty and staff is "How does one plan for evaluation with minimal funding and effort?" Typically, the evaluation allocation in grants we have developed over the past decade ranges from 10 percent of the budget at the beginning of a project (focused on deliverables and outcomes) to 20 percent at program maturity, when impact documentation is the critical function. In other words, evaluation should not be viewed as an ‘expense’ but as an investment in the future of the program. We recommend the following steps for developing a simple Extension evaluation system:

Step 1. Initial decision to evaluate. At this step, the project team must reflect on the basic educational goals and list measurable behavior changes. Project leaders should focus on two to three behavior changes per year and develop a list of success indicators. Besides assessment of learning, questions on the usefulness of publications, sources of information, and barriers to adoption can be included as important outcomes. One or two descriptive impact questions should be added at the end to evaluate repeat audiences.

Step 2. Developing the questionnaire template. Once the success indicators are listed, put together a basic set of questions ranging from demographic information to learning assessment. It is a good idea to have other team members or Extension educators review the questions and response choices to minimize bias. Survey design is an exciting area of current research in the evaluation field, and its rules are constantly in flux. Electronic or paper surveys should follow the evaluation standards (see above) and consider the human elements of design, for example, survey length, flow of questions, and color or visual appeal. For social research surveys, approval from the Institutional Review Board should be considered along with a data management plan. Long-term educational programs in Alabama Extension (such as the Beginning Farmer and Rancher Development Program) have survey templates in which 80 percent of the questions have been ‘standardized’ over the years and 20 percent are flexible based on the event. These standardized surveys are reviewed and approved each year by the ACES Commercial Horticulture Extension Team.

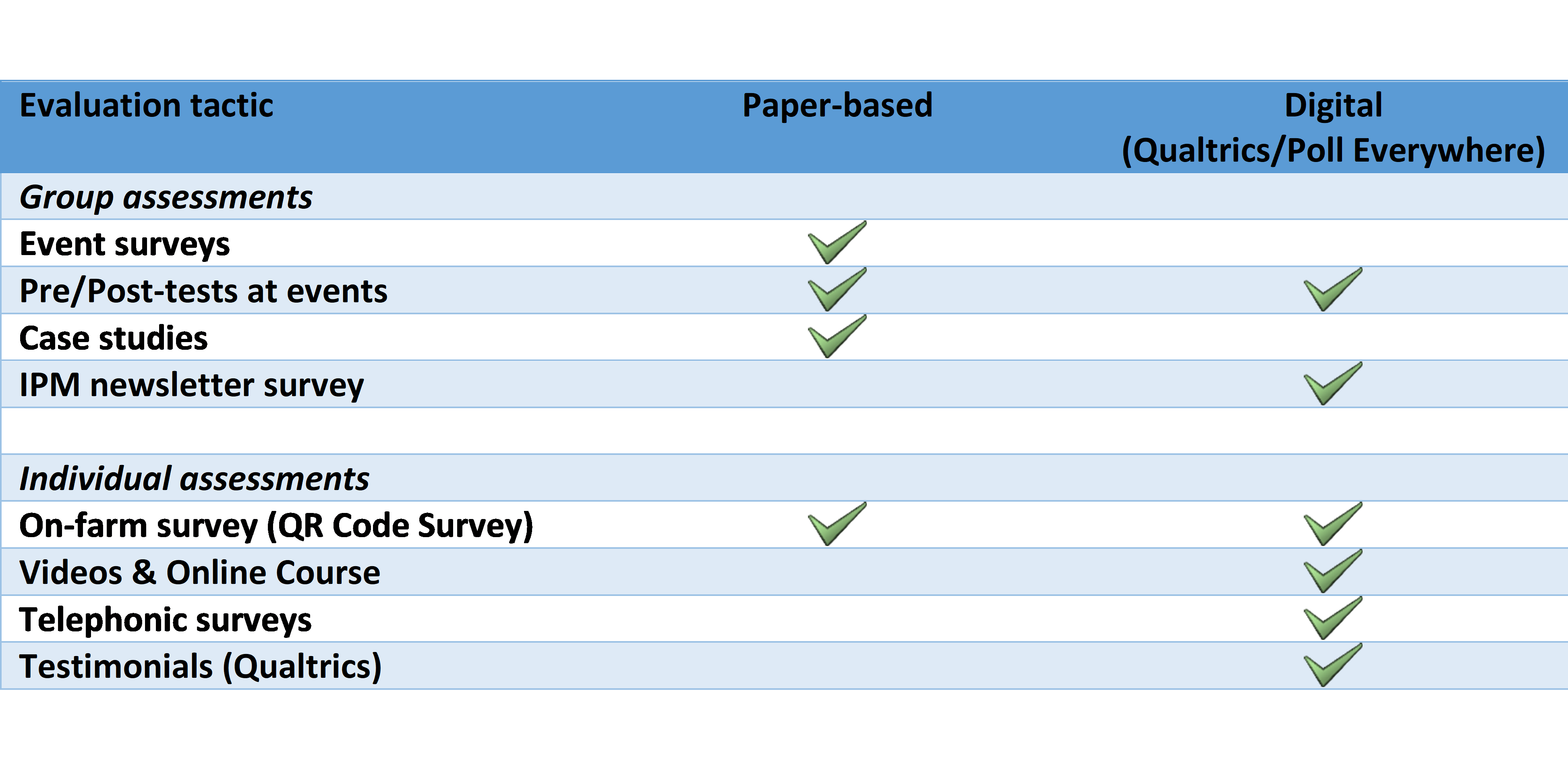

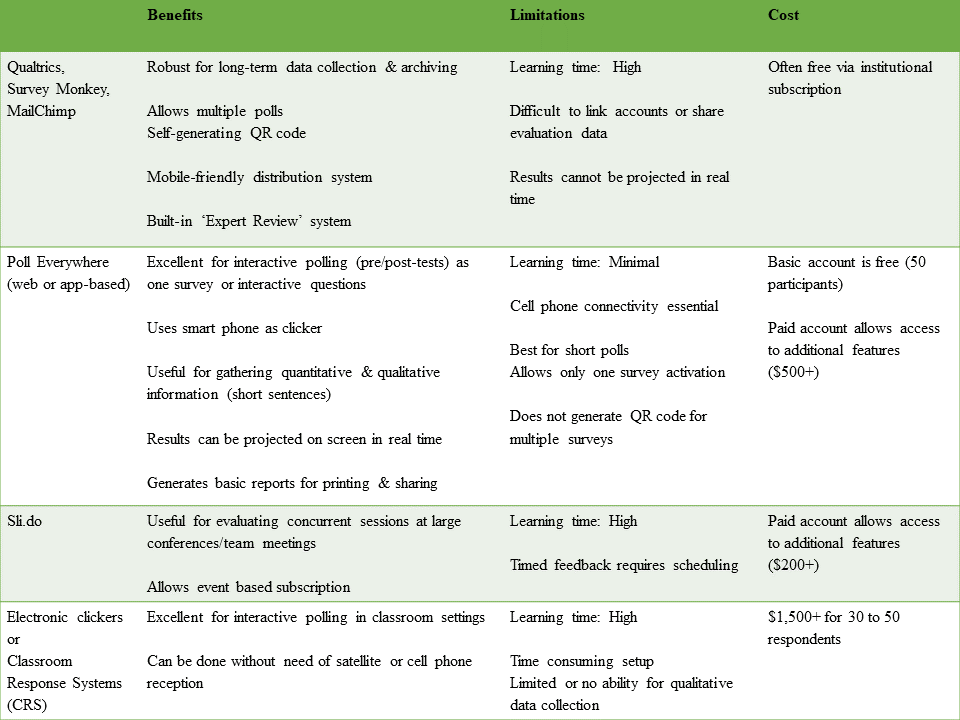

Step 3. Administering the survey. Once the team has committed to evaluation and the survey template is ready, it is time to consider the multitude of survey techniques (Table 1) and technologies (Table 2) available today. This is probably the most exciting part of Extension evaluation, and younger, app-savvy faculty members seem to enjoy it! Remember that a program may have to develop several templates and use multiple techniques for data collection. For example, a paper survey at events is excellent for a broad range of quantitative and qualitative questions, whereas electronic polling may not allow for lengthy qualitative feedback. Electronic polling also depends on cell phone signal availability, so plan ahead and have back-up plans.

Step 4. Data visualization and reporting. There are a number of excellent books on data visualization and visual thinking. Two easy-to-read references we use constantly were written by Evergreen (2016) and Brand (2017). We also create program timelines, or ‘roadmaps’, in Microsoft PowerPoint that provide snapshots of major programmatic outputs with the overall impacts at the bottom (Figure 3). The authors have included such roadmaps in many grant proposals to support a strong narrative, and reviewers have always appreciated the additional effort. Illustrated roadmaps can also be useful for sharing long-term evaluation data with legislators and other critical stakeholders who may not want to read lengthy project reports!

Figure 3. Program timeline as a visualization technique can be helpful in grant development process.

Another effective reporting method is a 2-3 minute video that combines grower testimonials with evaluation data or summaries generated from multiple surveys (Figure 4). On-farm videos of producers can be recorded with standard audio-visual equipment at appropriate locations on a farm (pay attention to sound and picture quality when using mobile devices!). Our recent videos, developed using Biteable.com, integrated interview recordings with animated slides. Impact videos should have realistic scenarios, short segments, and simple messages. We used a Canon HD Professional Camcorder with a lavalier clip microphone to record on-farm interviews with high audio and video quality. Such video reports provide compelling evidence of Extension impacts and can build a substantial body of testimonials over time. In the references, we link to the ACES ‘Beginning Farmer Project’ playlist on YouTube, where many impact videos are available for review. Since 2013, we have developed 11 impact videos that have received over 4,000 views from stakeholders and producers looking for motivation!

Figure 4. Short impact video report using evaluation data combined with client testimonial is a very useful reporting system.
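As a rough illustration of the Step 2 template idea, the Python sketch below keeps a reviewed core of standardized questions separate from event-specific ones and reports the standardized share. The class name, question wording, and the eight-plus-two split are hypothetical, chosen only to mirror the roughly 80/20 split described for the Beginning Farmer and Rancher Development Program templates; it is not that program's actual survey.

```python
from dataclasses import dataclass, field


@dataclass
class SurveyTemplate:
    """Hypothetical event survey: a reviewed core plus event-specific items."""

    core: list[str]  # standardized questions, reviewed once a year by the team
    flexible: list[str] = field(default_factory=list)  # tailored to one event

    def questions(self) -> list[str]:
        # Core questions come first and stay unchanged across events.
        return self.core + self.flexible

    def standardized_share(self) -> float:
        total = len(self.core) + len(self.flexible)
        return len(self.core) / total if total else 0.0


# Hypothetical core questions reflecting the success indicators in Steps 1 and 2.
core = [
    "County of residence",
    "Years of farming experience",
    "Knowledge of the topic before this session (1-5)",
    "Knowledge of the topic after this session (1-5)",
    "Usefulness of the publications provided (1-5)",
    "Main sources of production information",
    "Barriers to adopting the recommended practices",
    "Practices you plan to adopt in the next year",
]
workshop = SurveyTemplate(
    core=core,
    flexible=[
        "Which field demonstration was most useful?",
        "Topics you would like covered at the next workshop",
    ],
)
print(len(workshop.questions()), f"{workshop.standardized_share():.0%}")  # 10 80%
```

Keeping the core questions identical from event to event is what allows responses to be pooled and compared year over year, while the flexible items capture what is unique to each event.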

Evaluation Techniques & Available Technologies

Table 1 provides a general list of evaluation techniques used by agricultural Extension professionals to determine the effectiveness of training programs (Taylor-Powell, 2002; McNamara, 2017). For simplicity, the techniques are divided into group assessment and individual assessment tactics. Extension professionals can use this chart to select evaluation techniques that suit their program, audience, and available resources. To reduce bias, remember to conduct some evaluations at third-party events in addition to collecting data at your own events. Data collected from multiple sources using appropriate techniques should converge and show consistent trends. Review the survey questionnaires and techniques periodically.

Table 1. Some Common Extension Evaluation Techniques for Determining Needs, Processes (Outcomes) and Impacts.
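The expectation that data from multiple sources should converge can be given a crude first-pass check: compare the overall direction of change in one indicator across sources. The Python sketch below assumes each source yields a yearly percentage for the same indicator; the source names and numbers are hypothetical, and agreement in direction is only a sanity check, not a statistical test of consistency.

```python
def trend_direction(series: list[float]) -> int:
    """Return +1 if the last value exceeds the first, -1 if it is lower, 0 if equal."""
    if len(series) < 2:
        raise ValueError("need at least two time points")
    change = series[-1] - series[0]
    return (change > 0) - (change < 0)


def sources_converge(by_source: dict[str, list[float]]) -> bool:
    """True when every data source shows the same overall trend direction."""
    directions = {trend_direction(values) for values in by_source.values()}
    return len(directions) == 1


# Hypothetical percentages of respondents reporting adoption of a practice, by year.
adoption = {
    "own event surveys": [40.0, 48.0, 55.0],
    "email follow-up": [35.0, 41.0, 50.0],
    "third-party events": [30.0, 33.0, 38.0],
}
print(sources_converge(adoption))  # True
```

If one source trends the opposite way, that is a prompt to review its questions, technique, or audience rather than proof that either source is wrong.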

Table 2 provides a basic comparison of several electronic polling software packages frequently used in Extension. The use of electronic clickers has waned with the advent of smartphones, and mobile apps are becoming increasingly popular for polling live or remote audiences. Table 2 also indicates the approximate learning time for each package. For example, Poll Everywhere can be used extensively for pre/post-tests during educational events with relative ease. Poll Everywhere can collect qualitative information from smartphones, and its cloud-based results can be projected to a live audience. Note that pre/post-test questions should be designed around the actual educational content, and open-ended questions should be avoided. Images can be incorporated into polls to make them visually appealing to the audience and reduce the reading load on screen.
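For multiple-choice pre/post-tests, a simple per-question summary is the percentage of correct answers before and after instruction and the change in percentage points. The Python sketch below assumes responses have already been exported from the polling tool as lists of answer labels per question; the export format, function names, and data are hypothetical and do not represent any Poll Everywhere API. It compares group percentages only and does not match individual participants.

```python
def percent_correct(responses: list[str], correct: str) -> float:
    """Percentage of responses that match the answer key (0.0 if no responses)."""
    if not responses:
        return 0.0
    return 100 * sum(r == correct for r in responses) / len(responses)


def pre_post_summary(
    pre: dict[str, list[str]],
    post: dict[str, list[str]],
    key: dict[str, str],
) -> dict[str, tuple[float, float, float]]:
    """Per question: (pre % correct, post % correct, change in percentage points)."""
    summary = {}
    for question, answer in key.items():
        before = percent_correct(pre.get(question, []), answer)
        after = percent_correct(post.get(question, []), answer)
        summary[question] = (round(before, 1), round(after, 1), round(after - before, 1))
    return summary


# Hypothetical answer key and responses from one workshop.
key = {"Q1": "B", "Q2": "D"}
pre = {"Q1": ["A", "B", "C", "B"], "Q2": ["A", "D", "A", "C"]}
post = {"Q1": ["B", "B", "B", "C"], "Q2": ["D", "D", "D", "D"]}
print(pre_post_summary(pre, post, key))
# {'Q1': (50.0, 75.0, 25.0), 'Q2': (25.0, 100.0, 75.0)}
```

Because each question is tied to a specific piece of content, a small gain on one question points to the part of the presentation that may need revision.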

Qualtrics surveys are used extensively for long-term data collection, with the major advantage of multi-location polling. Quick response (QR) codes generated in Qualtrics can be embedded in slides or handouts for evaluating distance training workshops. Qualtrics and SurveyMonkey are also well suited to impact evaluations, where follow-up and piping (carrying earlier answers into later questions) are needed. The ACES Commercial Horticulture Program team uses a business card with a QR code for quick impact reporting after an on-site visit. The major issue with all digital methods is access to the survey (mainly due to poor cellphone reception). Check the event location in advance (avoid basement rooms or metal buildings) and keep back-up paper copies of surveys. Give participants adequate time to respond, and encourage participation by telling them about past feedback and the program improvements it led to!

Table 2. Comparison of Digital Survey Technologies.

CONCLUSIONS/FINAL THOUGHTS

In short, evaluation can be fun and informative for the program leader or event organizers and engaging for the audience. Here are some recommendations addressing frequently asked questions we have received during evaluation capacity-building workshops over the years.

About Evaluation Response Rates

One of the most frustrating aspects of Extension evaluation is the lack of participation and engagement. Unlike public education and health programs, where participants are monitored over a very long period of time, rural Extension programs have to deal with high attrition rates and low response numbers and motivation.

The Alabama Extension IPM, Beginning Farmer and Rancher Development (BFRD), and Alabama Sustainable Agriculture Research and Education (SARE) Programs have completed over 100 paper-based, face-to-face, and digital surveys in the past ten years. Currently, the response rate to paper-based surveys is about 60 percent. The response rate to email surveys is historically low, with an industry average of 19 to 20 percent (MailChimp); the BFRD e-newsletter survey has a 21 percent response rate. A consistent evaluation strategy has produced a large data set that provides reliable estimates of short-term behavioral changes and long-term impacts.
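A response rate is completed surveys divided by surveys that reached participants. The Python sketch below uses hypothetical counts chosen only to reproduce the 60 and 21 percent figures above; they are not the programs' actual counts.

```python
def response_rate(completed: int, delivered: int) -> float:
    """Completed surveys as a percentage of surveys that reached participants."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    if not 0 <= completed <= delivered:
        raise ValueError("completed must be between 0 and delivered")
    return 100 * completed / delivered


# Hypothetical (completed, delivered) counts per survey channel.
channels = {"paper (at events)": (45, 75), "email newsletter": (105, 500)}
for name, (completed, delivered) in channels.items():
    print(f"{name}: {response_rate(completed, delivered):.0f}%")
# paper (at events): 60%
# email newsletter: 21%
```

Whether the denominator counts all emails sent or only those delivered changes the rate, so it is worth stating which one a report uses when comparing against an industry average.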

Based on extensive feedback, here are some overall strategies for improving Extension survey response rates:

- Improve response rates by allowing adequate time in the event agenda so that participants are aware of the feedback system. Explain the process verbally, and then prepare the audience to use their phones for electronic polls. Do not rush the evaluation!

- Review questions and response options periodically with other educators to keep surveys short, with appropriate emphasis on outcome and impact queries (refer to the evaluation standards). Pay attention to survey design and visual appeal. Print surveys in color on good-quality paper or postcards. Thank the audience for their feedback!

- Rewards or incentives for surveys can improve feedback in the short term; however, responses can drop when rewards are later absent. So, tread carefully on this issue and use good judgment.

- Inform the audience how the aggregate data will be used for programmatic improvements (Fetterman, 2005, calls this empowerment evaluation).

- Use the friendliest producers from event surveys for on-farm video impact reports. These can also be producers to whom you provided direct service. This effort can give you the best response rate!

Developing a Culture of Evaluation in Extension

Program evaluation is like a lens that brings sharper focus to programmatic successes and challenges. It requires individual- and organizational-level commitment for long-term success. Grant writers developing integrated research and education grants should allocate a significant budget for educational methodology, communication/marketing, and evaluation plans that can be ‘standardized’ across program areas or Extension teams. For grant developers, evaluation should be planned with capacity building and cost-effectiveness in mind. The evaluation budget should include estimates of personnel wages, equipment costs, and travel expenses, which can be substantial for on-farm impact interviews and video production. Event evaluations are the low-hanging fruit, and such opportunities should not be lost. Remember to use various digital and traditional evaluation techniques consistently; this will make your evaluation plan practical and fun for the team. Share evaluation findings through visuals or videos to motivate team members and clientele, and share videos via blog articles and social media to attract new audiences. For evaluation training, we encourage you to join the American Evaluation Association or a regional evaluation association for professional development and networking opportunities. Keep discussing and implementing program evaluation as a team commitment instead of an individual burden!
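To check a draft evaluation budget against the 10 to 20 percent range described under Basic Steps to Extension Evaluation System, one can total the evaluation line items and divide by the total grant budget. In the Python sketch below, the line items and dollar amounts are hypothetical placeholders for the personnel, equipment, and travel estimates mentioned above.

```python
def evaluation_share(line_items: dict[str, float], total_budget: float) -> float:
    """Evaluation cost as a fraction of the total grant budget."""
    if total_budget <= 0:
        raise ValueError("total_budget must be positive")
    return sum(line_items.values()) / total_budget


# Hypothetical one-year evaluation line items for a $100,000 grant.
items = {"personnel wages": 9_000, "equipment": 2_500, "travel": 3_500}
share = evaluation_share(items, 100_000)
print(f"{share:.0%}", 0.10 <= share <= 0.20)  # 15% True
```

A project in its first year would sit near the lower end of that range, and a mature program focused on impact documentation (for example, on-farm video interviews) nearer the upper end.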

REFERENCES

Alabama Cooperative Extension System (ACES). Beginning Farmer Project Video Playlist. Retrieved on March 9, 2020. https://www.youtube.com/playlist?list=PLkNoAmOtt___MKj6IBxvWzOdWP0btBq4D

Allen, W. (2009). Learning for sustainability [formerly NRM-Changelinks (1998-2006)]. [Online] www.learningforsustainability.net. Accessed on May 7, 2009.

Anonymous. (2017). A framework for program evaluation. Centers for Disease Control and Prevention, Program Performance and Evaluation Office, Atlanta, GA. https://www.cdc.gov/eval/framework/index.htm

Anonymous. (2020). Generic Logic Model for NIFA Reporting. https://nifa.usda.gov/sites/default/files/resource/Generic%20Logic%20Model%20for%20NIFA%20Reporting.pdf

Bennett, C. (1975). Up the hierarchy. Journal of Extension. https://www.joe.org/joe/1975march/1975-2-a1.pdf

Brand, W. (2017). Visual thinking: Empowering people and organizations through visual collaboration. BIS Publishers, Amsterdam, The Netherlands.

Braverman, M.T., and Engle, M. (2009). Theory and rigor in Extension program evaluation planning. Journal of Extension. 47(3): v47-3a1. https://joe.org/joe/2009june/a1.php

Evergreen, S. (2016). Effective data visualization: The right chart for the right data. SAGE, California.

Fetterman, D.M. (2005). Empowerment evaluation principles in practice. Empowerment Evaluation Principles in Practice (D.M. Fetterman and A. Wandersman, editors). Guilford Publications, NY. https://www.guilford.com/excerpts/fetterman.pdf?t

Ghimire, N.R., and Martin, R.A. (2011). The educational process competencies: Importance to Extension Educators. Education Research Journals. 1(2): 14-23.

Kalambokidis, L. (2004). Identifying the public value in Extension programs. Journal of Extension. 42(2). 2FEA1. https://www.joe.org/joe/2004april/a1.php

Kluchinski, D. (2014). Evaluation behaviors, skills and needs of cooperative Extension agricultural and resource management field faculty and staff in New Jersey. Journal of the NACAA. 7(1). https://www.nacaa.com/journal/index.php?jid=349

McNamara, C. (2017). Field guide to nonprofit program design, marketing and Evaluation (5th edition). Authenticity Consulting, Minneapolis, MN.

Osborne, D., and Gaebler, T. (1992). Re-inventing Government. Plume, NY.

Patton, M.Q. (2008). Utilization-focused evaluation. SAGE Publications, California.

Radhakrishna, R., and Brown, C.F. (2010). Viewing Bennett's hierarchy from a different lens: Implications for Extension program evaluation. Journal of Extension. 48(6): v48-6tt1. https://joe.org/joe/2010december/tt1.php

Stup, R. (2003). Program evaluation: Use it to demonstrate value to potential clients. Journal of Extension. 41(4): 4COM1. https://joe.org/joe/2003august/comm1.php

Taylor-Powell, E., and Henert, E. (2008). Developing a Logic Model: Teaching and training guide. Program Development and Evaluation, University of Wisconsin-Extension. https://fyi.extension.wisc.edu/programdevelopment/files/2016/03/lmguidecomplete.pdf